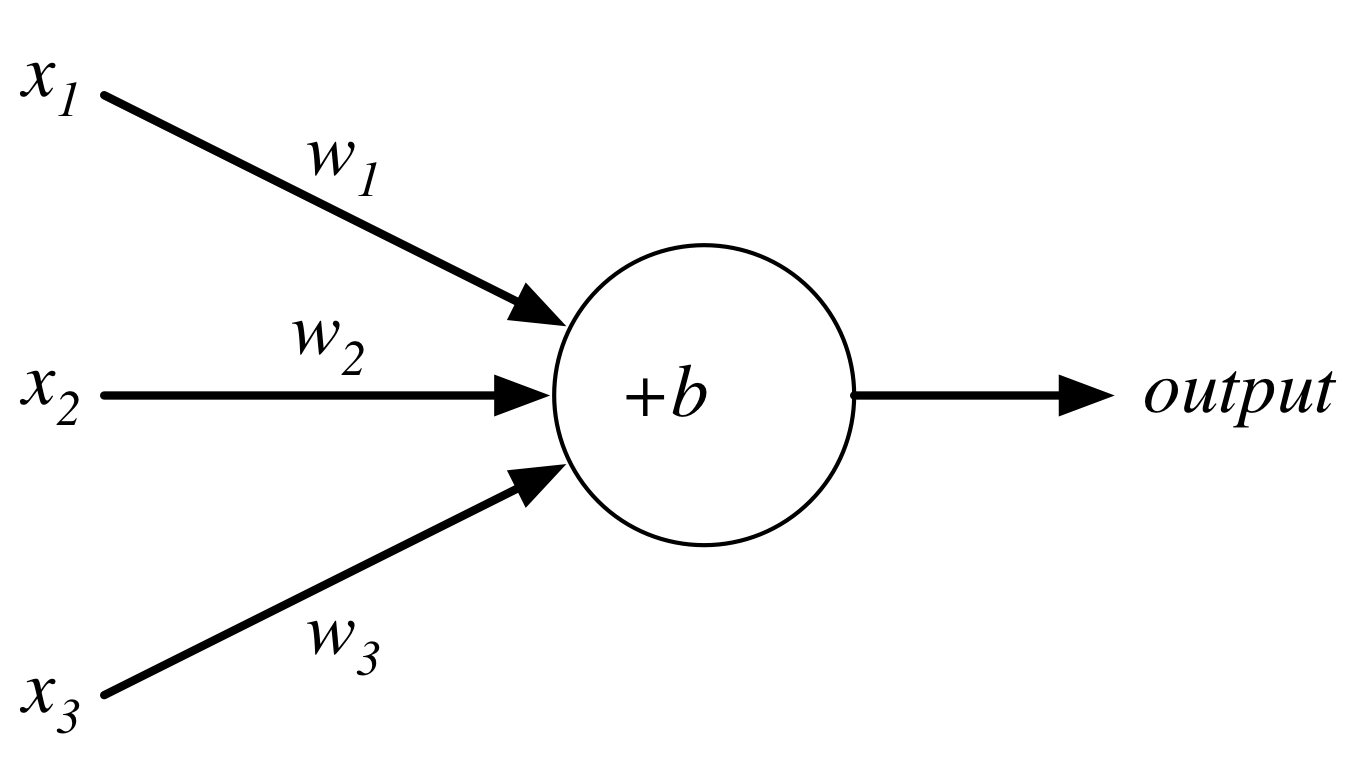

Perceptron¶

Consider the following perceptron:

If $x$ takes on only binary values, what are the possible outputs?
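As a minimal sketch of the question above, the snippet below enumerates every binary input of a two-input perceptron. The weights and bias are hypothetical (they are not read from the figure), and the step rule "output 1 when $w\cdot x + b > 0$" is the usual perceptron convention, assumed here.

```python
from itertools import product

def perceptron(x, w, b):
    """Step-activated neuron: 1 if w.x + b > 0, else 0."""
    z = sum(w_i * x_i for w_i, x_i in zip(w, x)) + b
    return 1 if z > 0 else 0

# Hypothetical weights and bias, chosen only for illustration.
w, b = (-2, -2), 3

# Evaluate the perceptron on all four binary inputs.
outputs = {x: perceptron(x, w, b) for x in product((0, 1), repeat=2)}
for x, y in outputs.items():
    print(x, "->", y)
```

With these particular values the perceptron outputs 0 only for the input (1, 1), so it behaves like a NAND gate; other weights give other Boolean functions.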

Neurons¶

Instead of a binary output, we set the output to the result of an activation function $\sigma$:

$$\text{output} = \sigma(w\cdot x + b)$$

Activation Functions: Step (Perceptron)¶

Activation Functions: Sigmoid (Logistic)¶

Activation Functions: tanh¶

Activation Functions: ReLU¶

Rectified Linear Unit: $\sigma(z) = \max(0,z)$
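The four activation functions named above can be sketched in plain Python as follows. The function names and the `neuron` helper are illustrative choices, and the step function is assumed to switch at $z = 0$.

```python
import math

def step(z):
    """Perceptron activation: 1 for positive input, else 0."""
    return 1.0 if z > 0 else 0.0

def sigmoid(z):
    """Logistic function, squashes z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Hyperbolic tangent, squashes z into (-1, 1)."""
    return math.tanh(z)

def relu(z):
    """Rectified linear unit: max(0, z)."""
    return max(0.0, z)

def neuron(x, w, b, activation):
    """output = activation(w . x + b)"""
    return activation(sum(w_i * x_i for w_i, x_i in zip(w, x)) + b)

# Same weighted input, four different activations.
for f in (step, sigmoid, tanh, relu):
    print(f.__name__, neuron([1.0, 0.5], [0.4, -0.2], 0.1, f))
```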

Networks¶

Terminology alert: networks of neurons are sometimes called multilayer perceptrons, despite not using the step function.

The number of input neurons corresponds to the number of features.

The number of output neurons corresponds to the number of label classes. For binary classification, it is common to have two output nodes.

Layers are typically fully connected.

Neural Networks¶

The universal approximation theorem says that, if some reasonable assumptions are made, a feedforward neural network with a finite number of nodes can approximate any continuous function to within a given error $\epsilon$ over a bounded input domain.

The theorem says nothing about the design (number of nodes/layers) of such a network.

The theorem says nothing about the learnability of the weights of such a network.

These are open theoretical questions.

Given a network design, how are we going to learn weights for the neurons?

Stochastic Gradient Descent¶

Randomly select $m$ training examples $X_j$ and compute the gradient of the loss function ($L$). Update weights and biases with a given learning rate $\eta$:

$$w_k' = w_k-\frac{\eta}{m}\sum_{j=1}^m \frac{\partial L_{X_j}}{\partial w_k}$$

$$b_l' = b_l-\frac{\eta}{m} \sum_{j=1}^m \frac{\partial L_{X_j}}{\partial b_l}$$
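To make the update rule concrete, here is a small sketch that applies it to a one-dimensional linear model $\hat{y} = wx + b$. The model, the squared-error loss, the synthetic data, and the values of $m$ and $\eta$ are all assumptions made for illustration.

```python
import random

def sgd_step(w, b, batch, eta):
    """One SGD update for y_hat = w*x + b with loss (y_hat - y)^2 per example."""
    m = len(batch)
    # Sum the per-example partial derivatives over the mini-batch.
    grad_w = sum(2 * (w * x + b - y) * x for x, y in batch)
    grad_b = sum(2 * (w * x + b - y) for x, y in batch)
    # w' = w - (eta / m) * sum of gradients, and likewise for b.
    return w - eta / m * grad_w, b - eta / m * grad_b

random.seed(0)
# Noise-free synthetic data from y = 3x + 1.
data = [(i / 10, 3 * (i / 10) + 1) for i in range(-20, 21)]

w, b = 0.0, 0.0
for _ in range(2000):
    batch = random.sample(data, 8)      # randomly select m = 8 examples
    w, b = sgd_step(w, b, batch, eta=0.05)

print(w, b)  # should approach the true values 3 and 1
```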

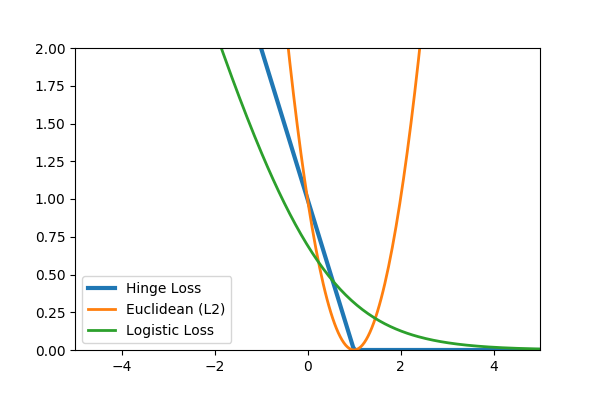

Common loss functions: logistic, hinge, cross entropy, Euclidean.
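As one example from this list, cross entropy for a single training example can be sketched as below. It assumes the network emits one unnormalized score (logit) per class and that a softmax turns the scores into probabilities; the function name is illustrative.

```python
import math

def cross_entropy(logits, label):
    """Cross-entropy loss for one example, given unnormalized class scores."""
    m = max(logits)  # subtract the max before exponentiating, for stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]  # equals -log(softmax(logits)[label])

# A 10-class model that scores every class equally incurs a loss of ln(10).
print(cross_entropy([0.0] * 10, label=3))

# A confident, correct prediction gives a much smaller loss.
print(cross_entropy([10.0, 0.0, 0.0], label=0))
```

The value $\ln 10 \approx 2.303$ is a useful reference point: an untrained 10-class classifier usually starts near it.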

Backpropagation¶

Backpropagation is an efficient algorithm for computing the partial derivatives needed by the gradient descent update rule. For a training example $x$ and loss function $L$ in a network with $N$ layers:

Feedforward. For each layer $l$ compute $$z^{l} = w^{l} a^{l-1} + b^{l}, \qquad a^{l} = \sigma(z^{l})$$ where $a^0 = x$, $z^l$ is the weighted input, and $a^l$ is the activation induced by $x$ (these are vectors representing all nodes of layer $l$).

Compute output error $$\delta^{N} = \nabla_a L \odot \sigma'(z^N)$$ where $(\nabla_a L)_j = \partial L / \partial a^N_j$ is the gradient of the loss with respect to the output activations and $\odot$ is the elementwise product.

Backpropagate the error. For each layer $l = N-1, \ldots, 1$ compute $$\delta^{l} = ((w^{l+1})^T \delta^{l+1}) \odot \sigma'(z^{l})$$

Calculate gradients $$\frac{\partial L}{\partial w^l_{jk}} = a^{l-1}_k \delta^l_j \text{ and } \frac{\partial L}{\partial b^l_j} = \delta^l_j$$
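The four steps can be sketched in plain Python for a small fully connected network. This is an illustration under stated assumptions, not a reference implementation: it assumes sigmoid activations in every layer, the loss $L = \frac{1}{2}\lVert a^N - y\rVert^2$, and arbitrary example weights. Python lists are zero-indexed, so `weights[l]` plays the role of $w^{l+1}$ and `activations[l]` the role of $a^{l}$.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)

def feedforward(x, weights, biases):
    """Return the weighted inputs z and activations a of every layer."""
    activations, zs = [x], []
    for w, b in zip(weights, biases):
        z = [sum(w_jk * a_k for w_jk, a_k in zip(row, activations[-1])) + b_j
             for row, b_j in zip(w, b)]
        zs.append(z)
        activations.append([sigmoid(z_j) for z_j in z])
    return zs, activations

def loss(x, y, weights, biases):
    """Assumed loss: 0.5 * ||a^N - y||^2."""
    _, activations = feedforward(x, weights, biases)
    return 0.5 * sum((a - t) ** 2 for a, t in zip(activations[-1], y))

def backprop(x, y, weights, biases):
    zs, activations = feedforward(x, weights, biases)      # feedforward
    # Output error; for the assumed loss, grad_a L = a^N - y.
    delta = [(a - t) * sigmoid_prime(z)
             for a, t, z in zip(activations[-1], y, zs[-1])]
    grads_w = [None] * len(weights)
    grads_b = [None] * len(weights)
    for l in range(len(weights) - 1, -1, -1):
        # Gradients: dL/dw_jk = (input activation k) * delta_j, dL/db_j = delta_j.
        grads_w[l] = [[a_k * d_j for a_k in activations[l]] for d_j in delta]
        grads_b[l] = list(delta)
        if l > 0:
            # Backpropagate the error through the transposed weights.
            delta = [sum(weights[l][j][k] * delta[j] for j in range(len(delta)))
                     * sigmoid_prime(zs[l - 1][k])
                     for k in range(len(zs[l - 1]))]
    return grads_w, grads_b

# Tiny 2-3-1 network with arbitrary illustrative weights.
weights = [[[0.1, -0.2], [0.4, 0.3], [-0.5, 0.2]], [[0.3, -0.1, 0.2]]]
biases = [[0.0, 0.1, -0.1], [0.05]]
x, y = [1.0, 0.5], [1.0]
grads_w, grads_b = backprop(x, y, weights, biases)

# Sanity check one partial derivative against a finite-difference estimate.
eps = 1e-6
bumped = [[row[:] for row in w] for w in weights]
bumped[0][1][0] += eps
numeric = (loss(x, y, bumped, biases) - loss(x, y, weights, biases)) / eps
print(grads_w[0][1][0], numeric)
```

The two printed numbers should agree to several decimal places, which is a cheap way to catch indexing mistakes in a hand-written backward pass.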

Backpropagation as the Chain Rule¶

$$\frac{\partial L}{\partial a^l} \cdot \frac{\partial a^l}{\partial z^l} \cdot \frac{\partial z^l}{\partial a^{l-1}} \cdot \frac{\partial a^{l-1}}{\partial z^{l-1}} \cdot \frac{\partial z^{l-1}}{\partial a^{l-2}} \cdots \frac{\partial a^{1}}{\partial z^{1}} \cdot \frac{\partial z^{1}}{\partial x} $$

Deep Learning¶

A deep network is not more powerful (recall that a network with a single hidden layer can already approximate any continuous function), but it may be more concise: it can approximate some functions with many fewer nodes.

Convolution Filters¶

A filter applies a convolution kernel to an image.

The kernel is represented by an $n \times n$ matrix where the target pixel is in the center.

The output of the filter is the sum of the products of the matrix elements with the corresponding pixels.

Examples (from Wikipedia): identity, blur, and edge detection kernels.
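A minimal sketch of applying such a filter to a grayscale image stored as a list of rows. The three kernels are the commonly cited 3x3 identity, box blur, and edge detection matrices. Treating pixels outside the image as 0 is an assumption made here, and the kernel is applied without flipping, which makes no difference for these symmetric kernels.

```python
def apply_filter(image, kernel):
    """Sum of products of kernel entries with the pixels around each target."""
    n = len(kernel)
    r = n // 2                      # the target pixel sits at the kernel center
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            total = 0.0
            for di in range(n):
                for dj in range(n):
                    y, x = i + di - r, j + dj - r
                    if 0 <= y < h and 0 <= x < w:   # outside pixels count as 0
                        total += kernel[di][dj] * image[y][x]
            out[i][j] = total
    return out

IDENTITY = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
BLUR = [[1 / 9] * 3 for _ in range(3)]               # box blur
EDGE = [[-1, -1, -1], [-1, 8, -1], [-1, -1, -1]]

# A 5x5 image with a vertical edge between columns 1 and 2.
image = [[0, 0, 9, 9, 9] for _ in range(5)]

edges = apply_filter(image, EDGE)
print(edges[2])   # responds strongly next to the edge, not in the flat interior
```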

Feature Maps¶

We can think of a kernel as identifying a feature in an image and the resulting image as a feature map that has high values (white) where the feature is present and low values (black) elsewhere.

Feature maps retain the spatial relationship between features present in the original image.

Convolutional Layers¶

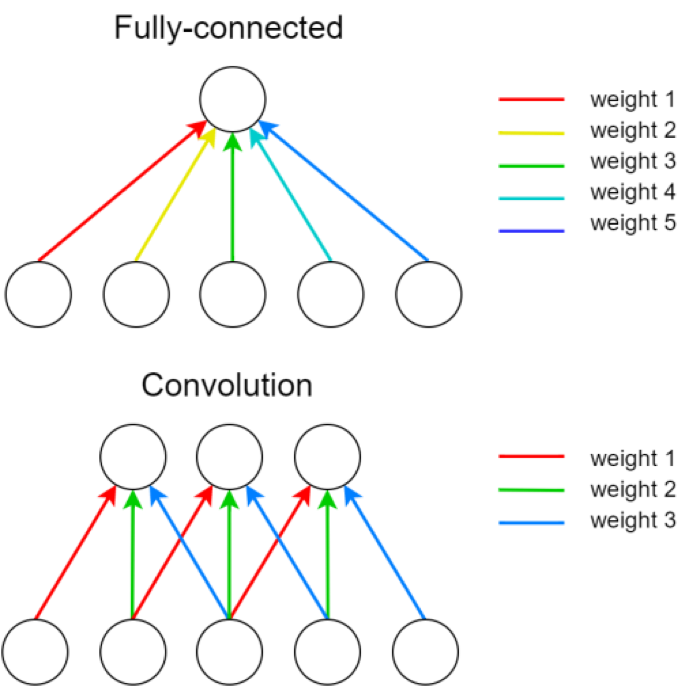

A single kernel is applied across the input. For each output feature map there is a single set of weights.

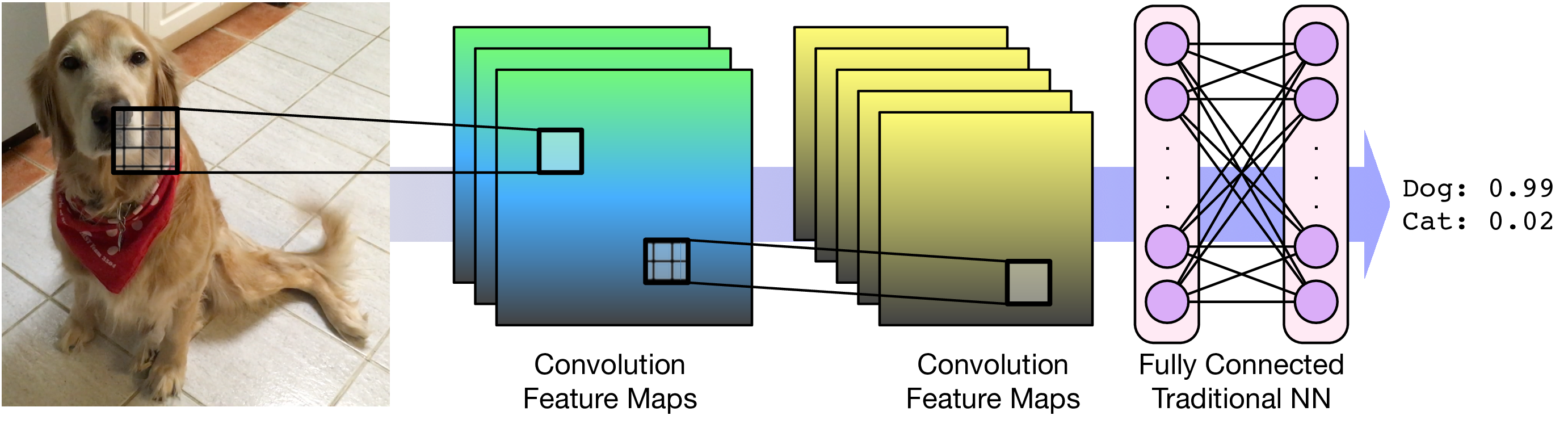

For images, each pixel is an input feature. Each hidden layer is a set of feature maps.

Pooling¶

Pooling layers apply a fixed convolution (usually the non-linear MAX kernel). The kernel is usually applied with a stride to reduce the size of the layer.

- faster to train

- fewer parameters to fit

- less sensitive to small changes (MAX)
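A short sketch of MAX pooling with a stride, written for a feature map stored as a list of rows. The 2x2 window and stride of 2 are assumed defaults, chosen because they are a common configuration.

```python
def max_pool(feature_map, size=2, stride=2):
    """Take the maximum over each size x size window, moving by `stride`."""
    h, w = len(feature_map), len(feature_map[0])
    return [[max(feature_map[i + di][j + dj]
                 for di in range(size) for dj in range(size))
             for j in range(0, w - size + 1, stride)]
            for i in range(0, h - size + 1, stride)]

feature_map = [[1, 2, 0, 1],
               [3, 4, 1, 0],
               [0, 0, 5, 6],
               [1, 0, 7, 8]]

pooled = max_pool(feature_map)
print(pooled)  # the 4x4 map shrinks to 2x2: [[4, 1], [1, 8]]
```

Because only the maximum in each window survives, shifting a bright pixel by one position inside its window leaves the output unchanged.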

Consider an input image with 100 pixels. In a classic neural network, we hook these pixels up to a hidden layer with 10 nodes. In a CNN, we hook these pixels up to a convolutional layer with a 3x3 kernel and 10 output feature maps.
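One comparison this setup invites is the number of learnable parameters in each design. The arithmetic below is a sketch that assumes a single-channel input, one bias per hidden node, and one bias per output feature map.

```python
def dense_params(n_inputs, n_nodes):
    """Fully connected layer: one weight per input-node pair, one bias per node."""
    return n_inputs * n_nodes + n_nodes

def conv_params(kernel_size, in_channels, out_maps):
    """Convolutional layer: one shared kernel (plus a bias) per output feature map."""
    return out_maps * (kernel_size * kernel_size * in_channels + 1)

dense = dense_params(n_inputs=100, n_nodes=10)
conv = conv_params(kernel_size=3, in_channels=1, out_maps=10)
print(dense, conv)  # 1010 versus 100
```

The convolutional count does not depend on the image size, because the same kernel weights are reused at every position.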

PyTorch Tensors¶

A Tensor is very similar to numpy.array in functionality. In addition, a Tensor:

- Is allocated to a device (CPU vs GPU)

- Potentially maintains autograd information

tensor([[0.8278, 0.9498, 0.5665, 0.3035],

[0.4141, 0.8017, 0.0354, 0.1045],

[0.7318, 0.3452, 0.8140, 0.3527]])

(torch.Size([3, 4]), torch.float32, device(type='cpu'), False)
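Output of this form can be produced with a few lines of PyTorch. The original cell is not shown, so the snippet below is a plausible reconstruction rather than the author's code.

```python
import torch

# A 3x4 tensor of uniform random values in [0, 1).
x = torch.rand(3, 4)
print(x)

# Shape, element type, device, and whether autograd tracks this tensor.
print((x.shape, x.dtype, x.device, x.requires_grad))

# Tensors on the CPU convert to NumPy arrays (sharing memory) and back.
as_numpy = x.numpy()
back = torch.from_numpy(as_numpy)
```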

Modules vs Functional¶

Modules are objects that can be initialized with default parameters and store any learnable parameters. Learnable parameters can be easily extracted from the module (and any member modules). Modules are called as functions on their inputs.

Functional APIs maintain no state. All parameters are passed when the function is called.
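The difference can be seen by computing the same linear map both ways. This is a small sketch using `torch.nn.Linear` and `torch.nn.functional.linear`; the layer sizes are arbitrary.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

x = torch.rand(2, 4)

# Module: created once, stores its own weight and bias, then called like a function.
linear = nn.Linear(4, 3)
y_module = linear(x)

# Functional: stateless, so the weight and bias are passed on every call.
y_functional = F.linear(x, linear.weight, linear.bias)

# Learnable parameters are easy to extract from a module.
n_params = sum(p.numel() for p in linear.parameters())
print(torch.allclose(y_module, y_functional), n_params)
```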

A network is a module¶

To define a network, we create a module with submodules for the operations that have learnable parameters. Generally, use the functional API for operations without learnable parameters.
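A minimal sketch of this pattern follows. The class name `Net`, the layer sizes, and the choice of a two-layer fully connected design for 28x28 inputs are assumptions for illustration, not the network used in the notebook.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class Net(nn.Module):
    def __init__(self):
        super().__init__()
        # Submodules hold the learnable parameters.
        self.fc1 = nn.Linear(28 * 28, 64)
        self.fc2 = nn.Linear(64, 10)

    def forward(self, x):
        x = torch.flatten(x, start_dim=1)   # (batch, 1, 28, 28) -> (batch, 784)
        x = F.relu(self.fc1(x))             # functional API: ReLU has no parameters
        return self.fc2(x)                  # one score per class

net = Net()
scores = net(torch.rand(10, 1, 28, 28))     # a batch of 10 random "images"
print(scores.shape)
```

Calling `net.parameters()` walks the submodules, which is what lets an optimizer find every weight and bias.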

MNIST¶

(<PIL.Image.Image image mode=L size=28x28>, 5)

Inputs need to be tensors...

tensor([[[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0118, 0.0706, 0.0706, 0.0706,

0.4941, 0.5333, 0.6863, 0.1020, 0.6510, 1.0000, 0.9686, 0.4980,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.1176, 0.1412, 0.3686, 0.6039, 0.6667, 0.9922, 0.9922, 0.9922,

0.9922, 0.9922, 0.8824, 0.6745, 0.9922, 0.9490, 0.7647, 0.2510,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.1922,

0.9333, 0.9922, 0.9922, 0.9922, 0.9922, 0.9922, 0.9922, 0.9922,

0.9922, 0.9843, 0.3647, 0.3216, 0.3216, 0.2196, 0.1529, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0706,

0.8588, 0.9922, 0.9922, 0.9922, 0.9922, 0.9922, 0.7765, 0.7137,

0.9686, 0.9451, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.3137, 0.6118, 0.4196, 0.9922, 0.9922, 0.8039, 0.0431, 0.0000,

0.1686, 0.6039, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0549, 0.0039, 0.6039, 0.9922, 0.3529, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.5451, 0.9922, 0.7451, 0.0078, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0431, 0.7451, 0.9922, 0.2745, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.1373, 0.9451, 0.8824, 0.6275,

0.4235, 0.0039, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.3176, 0.9412, 0.9922,

0.9922, 0.4667, 0.0980, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.1765, 0.7294,

0.9922, 0.9922, 0.5882, 0.1059, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0627,

0.3647, 0.9882, 0.9922, 0.7333, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.9765, 0.9922, 0.9765, 0.2510, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.1804, 0.5098,

0.7176, 0.9922, 0.9922, 0.8118, 0.0078, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.1529, 0.5804, 0.8980, 0.9922,

0.9922, 0.9922, 0.9804, 0.7137, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0941, 0.4471, 0.8667, 0.9922, 0.9922, 0.9922,

0.9922, 0.7882, 0.3059, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0902, 0.2588, 0.8353, 0.9922, 0.9922, 0.9922, 0.9922, 0.7765,

0.3176, 0.0078, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0706, 0.6706,

0.8588, 0.9922, 0.9922, 0.9922, 0.9922, 0.7647, 0.3137, 0.0353,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.2157, 0.6745, 0.8863, 0.9922,

0.9922, 0.9922, 0.9922, 0.9569, 0.5216, 0.0431, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.5333, 0.9922, 0.9922, 0.9922,

0.8314, 0.5294, 0.5176, 0.0627, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000],

[0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000, 0.0000,

0.0000, 0.0000, 0.0000, 0.0000]]])

torch.Size([1, 28, 28])

Training MNIST¶

[tensor([[[[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

...,

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.]]],

[[[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

...,

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.]]],

[[[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

...,

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.]]],

...,

[[[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

...,

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.]]],

[[[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

...,

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.]]],

[[[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

...,

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.]]]]),

tensor([7, 3, 2, 8, 2, 8, 9, 0, 3, 1])]

tensor([[-0.0046, 0.0154, 0.0583, -0.0139, 0.0108, 0.0126, 0.0472, -0.0524,

-0.1266, -0.0541],

[ 0.0627, 0.0350, -0.0288, 0.0551, 0.0666, 0.0135, 0.0770, -0.1265,

-0.1067, -0.0711],

[ 0.0107, -0.0808, -0.0220, 0.1164, 0.0692, 0.0104, 0.0578, -0.0578,

-0.0770, -0.1102],

[ 0.0007, -0.0244, -0.0380, 0.1365, 0.0560, 0.0264, 0.0675, -0.0926,

-0.0921, -0.0530],

[ 0.0243, -0.0152, 0.0004, 0.0329, 0.1065, -0.0140, 0.0985, -0.0977,

-0.0735, -0.0658],

[ 0.0297, 0.0019, -0.0382, 0.1322, 0.0352, 0.0180, 0.1035, -0.0995,

-0.0646, -0.0765],

[-0.0226, -0.0480, -0.0361, 0.0404, 0.0097, 0.0148, 0.0399, -0.0366,

-0.1430, -0.0075],

[ 0.0740, 0.0156, -0.0477, 0.1097, 0.1008, 0.0111, 0.0768, -0.0905,

-0.0431, -0.0928],

[ 0.0595, 0.0201, -0.0897, 0.0976, 0.0733, -0.0140, 0.0782, -0.1178,

-0.1070, -0.0730],

[-0.0364, -0.0495, -0.0353, 0.0535, 0.0161, 0.0208, 0.0147, -0.0673,

-0.0699, -0.0063]], device='cuda:0', grad_fn=<AddmmBackward0>)

Our network takes an image (as a tensor) and outputs a score (logit) for each class; applying a softmax to these scores gives class probabilities.

- Need a loss

- Need an optimizer (e.g. SGD, Adam)

`backward` does not update parameters. It only computes the gradients; the optimizer's `step` applies the update.

tensor(2.3059, device='cuda:0', grad_fn=<NllLossBackward0>)
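A single training step can be sketched as follows. The one-layer stand-in model, the random batch, and the learning rate are assumptions, chosen so that the snippet runs without downloading MNIST; the weight comparisons are there only to show which call changes the parameters.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
model = nn.Linear(28 * 28, 10)                 # stand-in for the real network
images = torch.rand(10, 28 * 28)               # random batch instead of MNIST
labels = torch.randint(0, 10, (10,))

loss_fn = nn.CrossEntropyLoss()                # expects raw class scores
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

before = model.weight.detach().clone()

optimizer.zero_grad()                          # clear gradients from earlier steps
loss = loss_fn(model(images), labels)
loss.backward()                                # fills .grad, leaves weights alone
unchanged_after_backward = torch.equal(before, model.weight.detach())

optimizer.step()                               # applies the gradient update
changed_after_step = not torch.equal(before, model.weight.detach())

print(loss.item(), unchanged_after_backward, changed_after_step)
```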

Epoch - One pass through the training data.

CPU times: user 5min, sys: 25.4 s, total: 5min 26s

Wall time: 6min 39s

[Plot of the training loss over batches]

This is the batch loss.

Testing MNIST¶

Accuracy 0.9703
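Accuracy here means the fraction of test examples whose highest-scoring class matches the true label. A plain Python sketch, with made-up scores and labels:

```python
def accuracy(scores, labels):
    """Fraction of examples whose highest-scoring class equals the true label."""
    correct = 0
    for row, label in zip(scores, labels):
        predicted = max(range(len(row)), key=row.__getitem__)   # argmax
        correct += predicted == label
    return correct / len(labels)

# Made-up class scores for four examples and three classes.
scores = [[0.1, 2.0, -1.0], [1.5, 0.2, 0.3], [0.0, 0.1, 0.9], [0.4, 0.3, 0.2]]
labels = [1, 0, 2, 1]
print(accuracy(scores, labels))  # 3 of the 4 predictions match
```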

Some Failures¶

*Not from this particular network

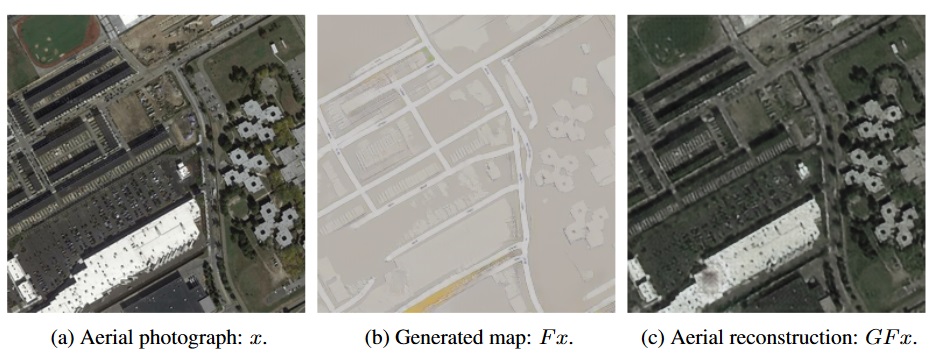

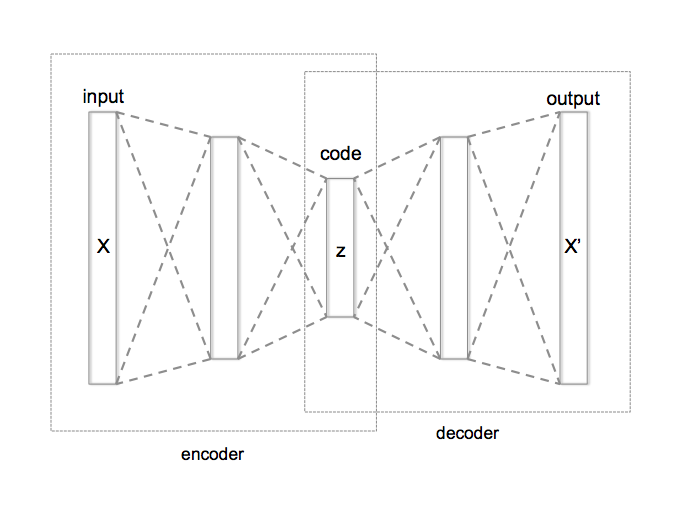

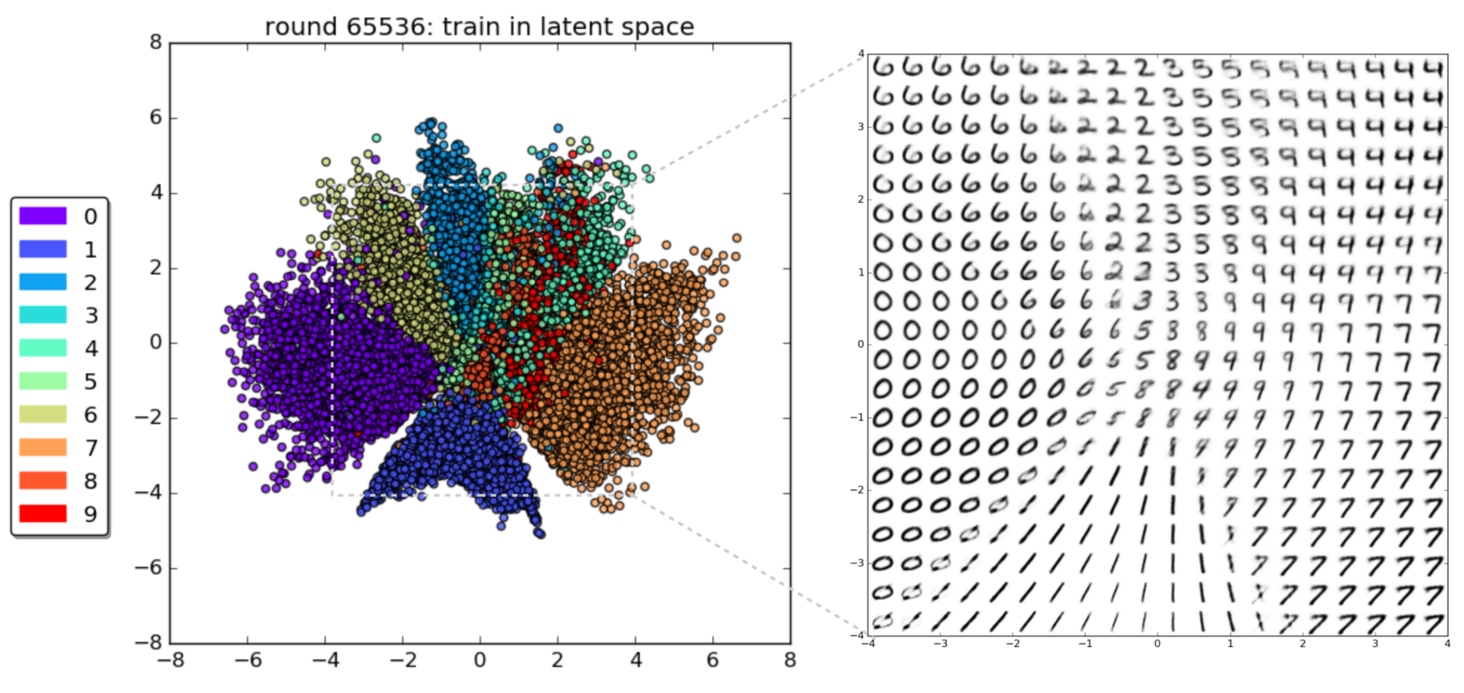

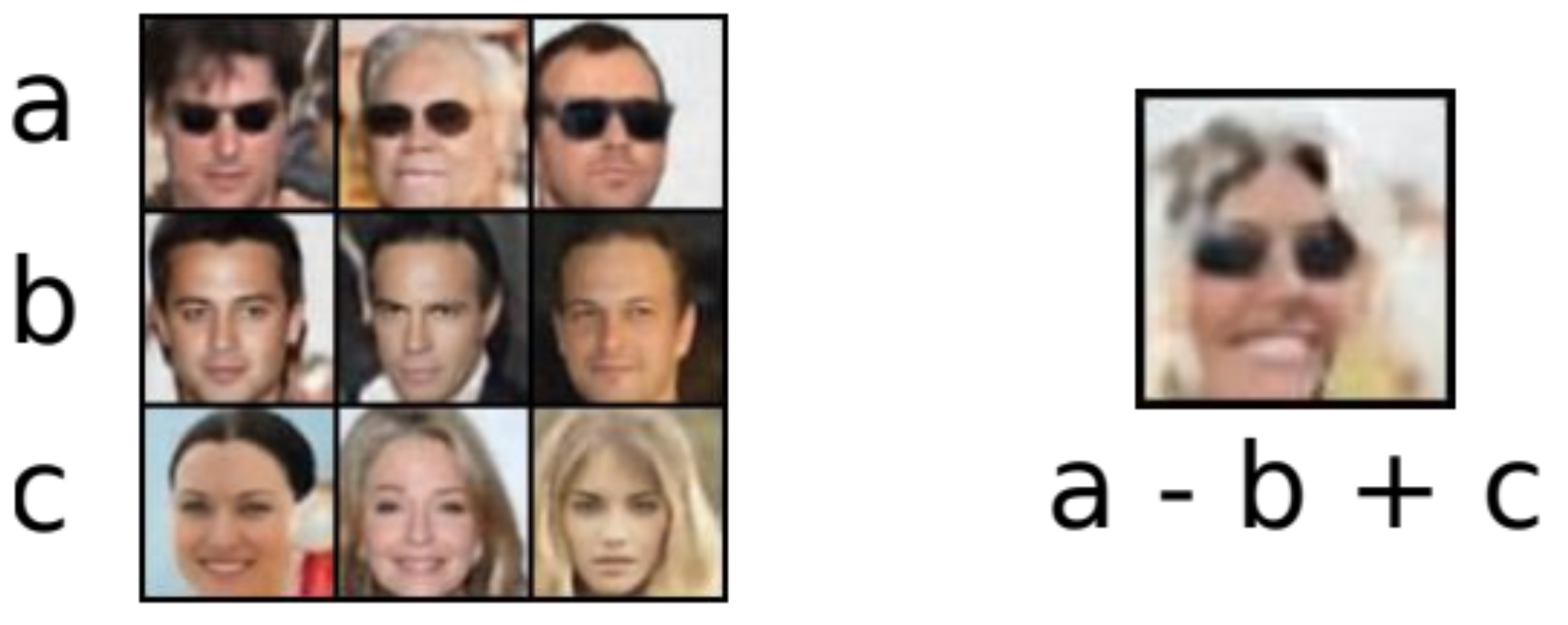

Generative vs. Discriminative¶

A generative model produces as output what a discriminative model takes as input: it models $P(X|Y=y)$ or $P(X,Y)$, whereas a discriminative model models $P(Y|X)$.

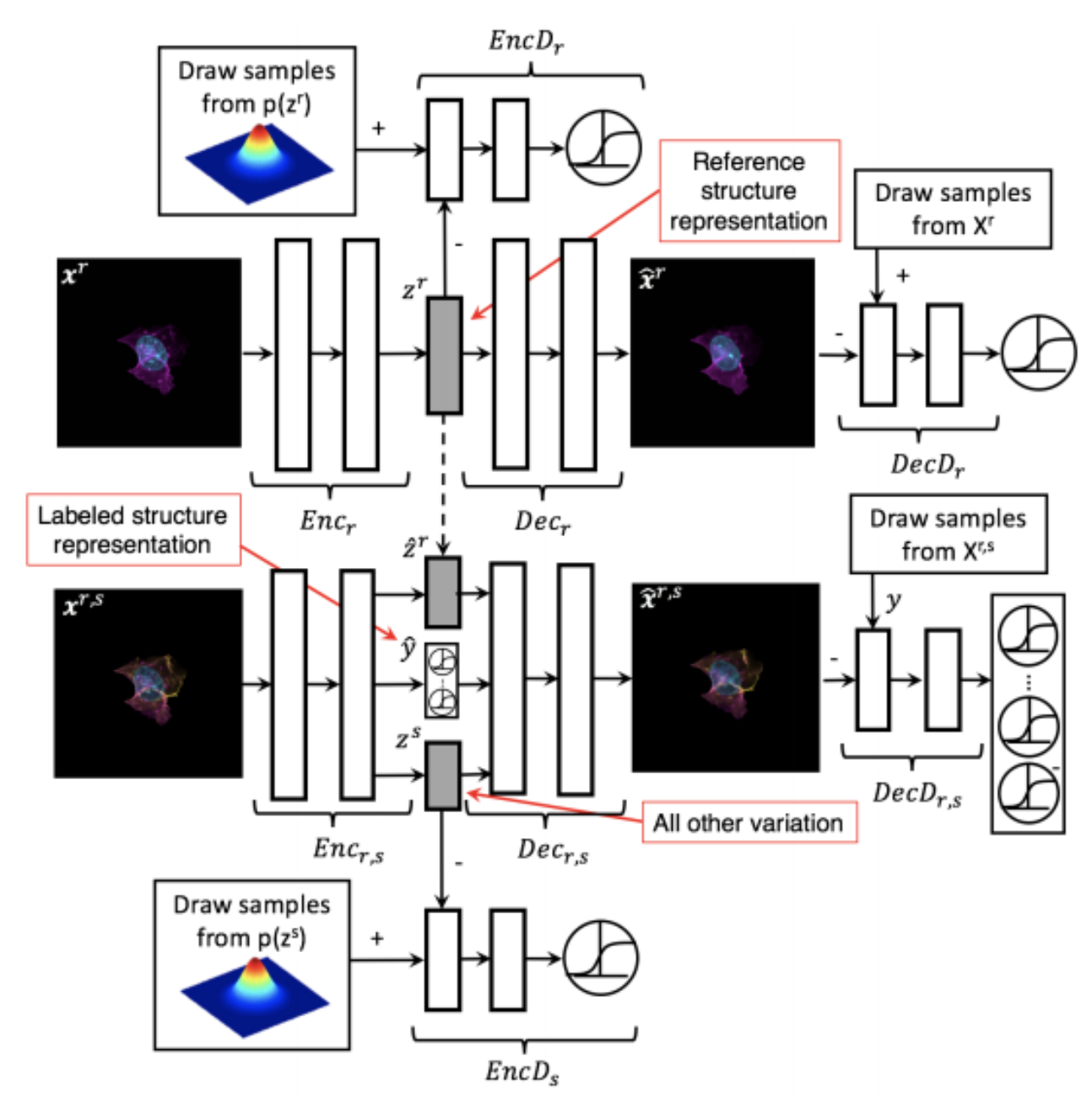

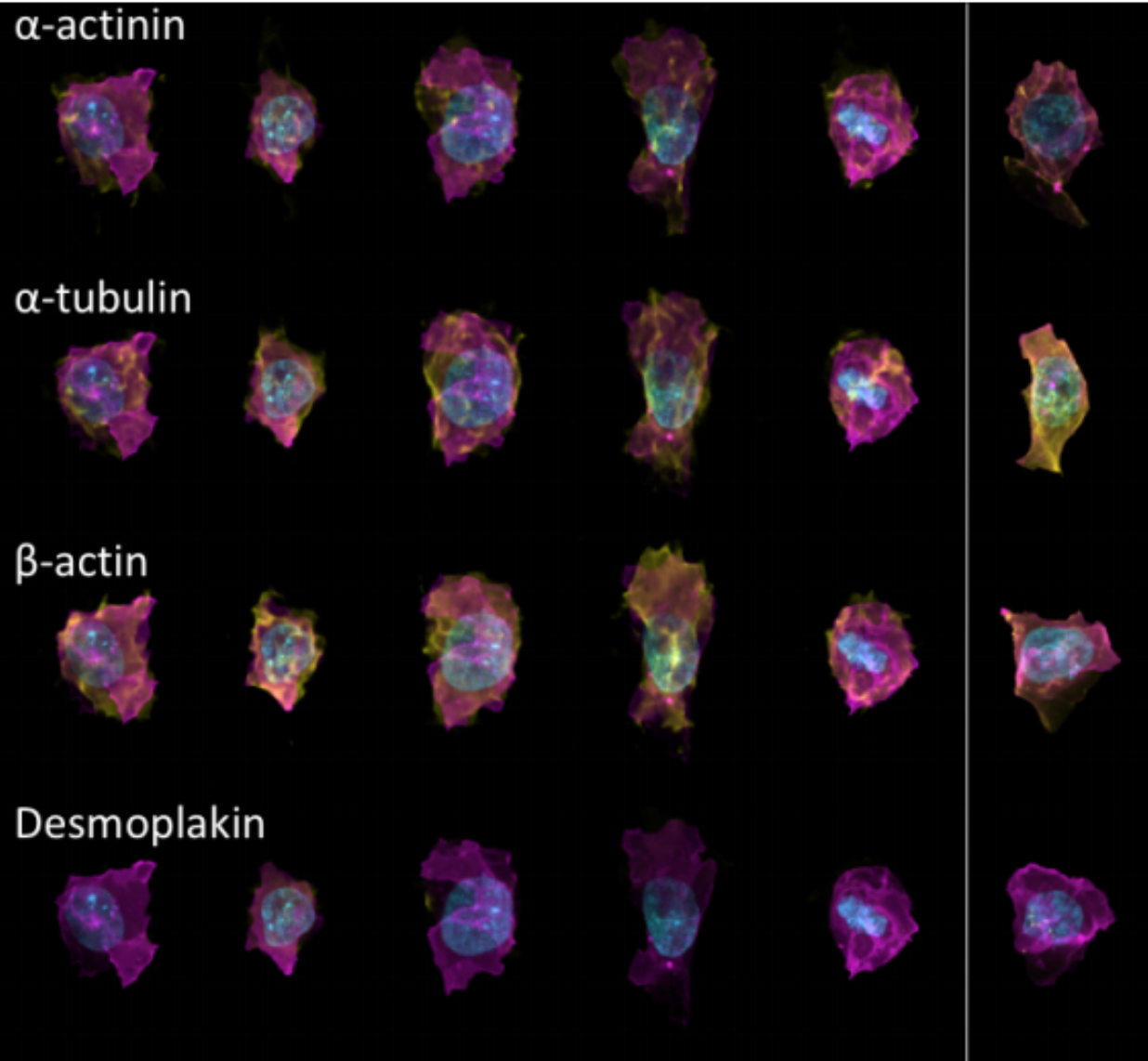

Generative Models of the Cell¶

https://arxiv.org/pdf/1705.00092.pdf

https://drive.google.com/file/d/0B2tsfjLgpFVhMnhwUVVuQnJxZTg/view

Generative Adversarial Networks¶

https://arxiv.org/abs/1406.2661

https://youtu.be/G06dEcZ-QTg

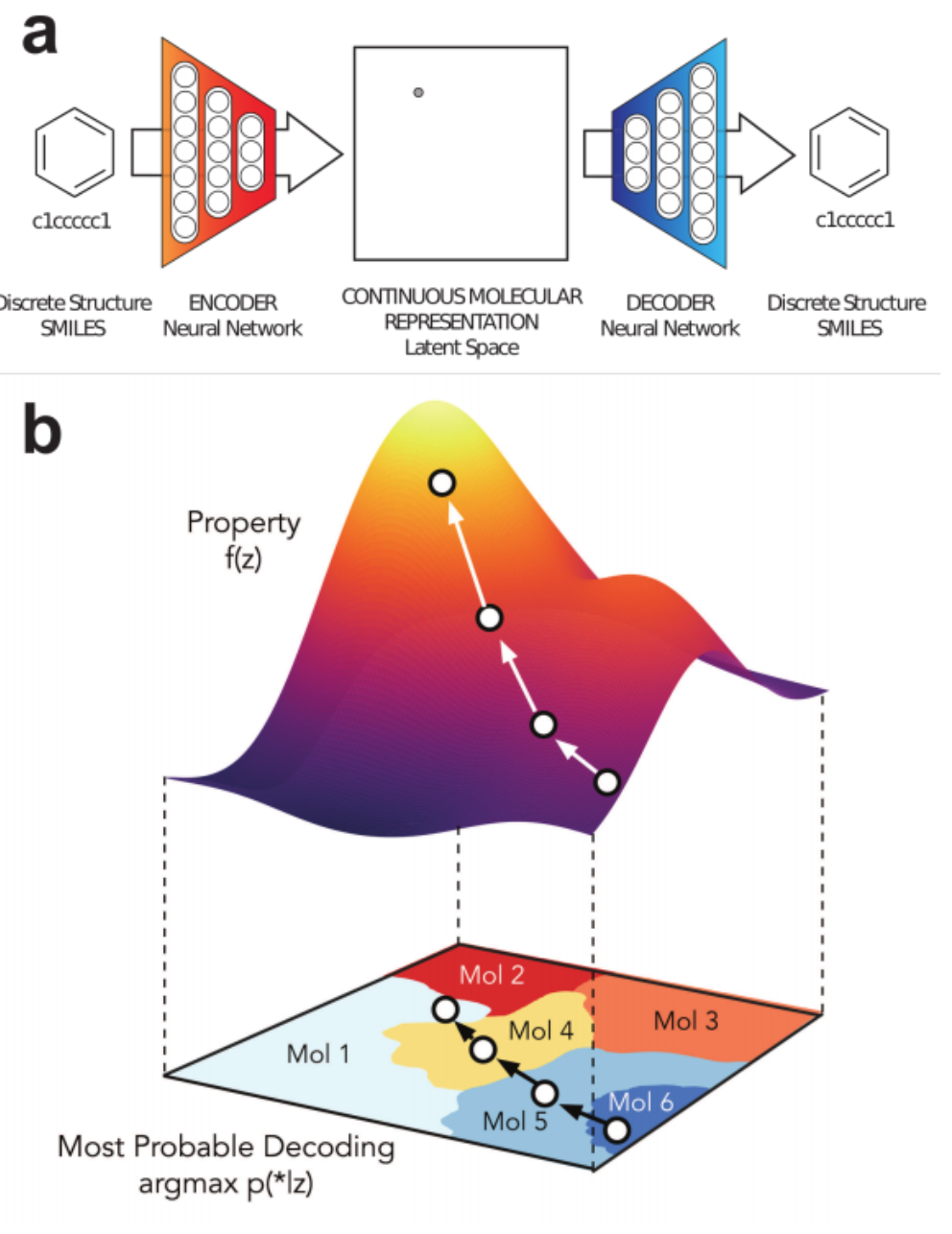

Deep learning is not profound learning.¶

But it is quite powerful and flexible.

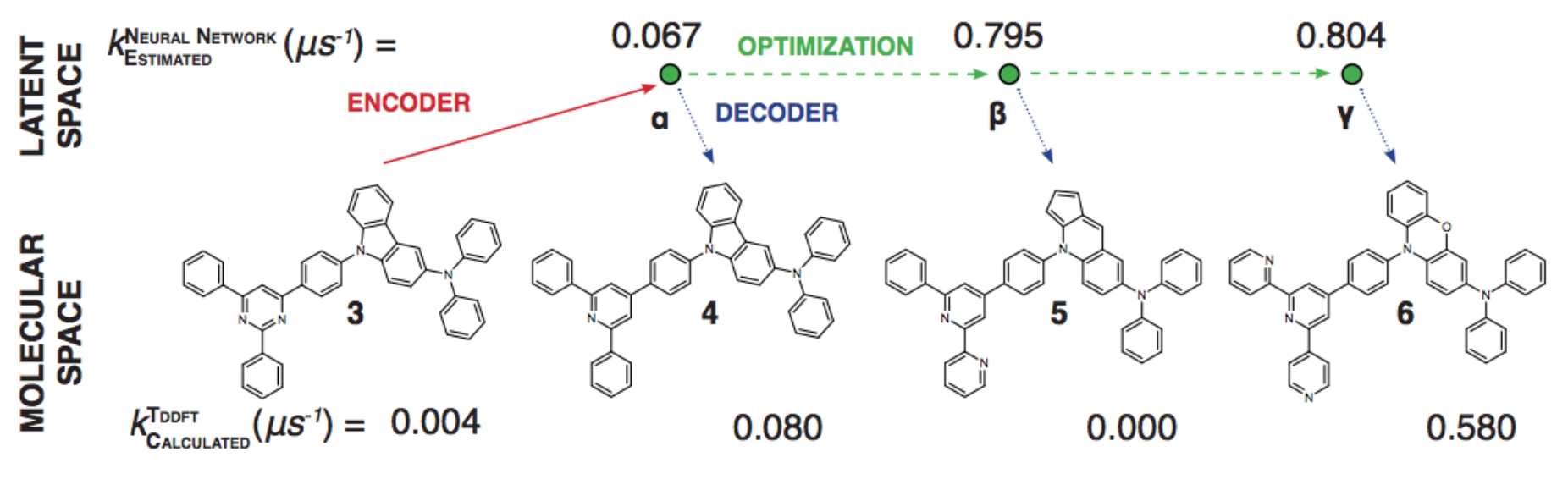

1% - 70% of output valid SMILES
